It is commonly known, that the success of a company/business/product directly depends on the customer, so if your customer likes your product it's your success. If not then you need to improvise it by making some changes to it.

Question: How will you know whether your product is successful or not?

Well for that you need to analyze your customers and one of the attributes of analyzing your customer is to analyze their sentiment of them on the specific product and this is where the sentiment analysis comes into the picture.

So, let's start with what is sentiment analysis?

Sentiment Analysis we can say it is a process of computationally identifying and categorizing opinions from a piece of text, and determining whether the writer's attitude towards the particular topic or product is positive, negative, or neutral.

It might be possible not as an individual every time you don't perform a sentiment analysis but you do look for the feedback right like before purchasing a product or downloading an app on your device(phone) either from the App Store or Play Store you can look for feedback of what other customers or users are saying about that product whether is good or bad and you analyze it manually. Consider at the company level, how do they analyze what customers are saying about particular products, they do have more than millions of customers. That is where companies need to perform sentiment analysis to know whether their products are doing good on the market or not.

What is NLTK?

NLTK stands for Natural Language Toolkit, It provides us with various text processing libraries with a lot of test datasets. Today you will learn how to process text for sentiment analysis using NLTK. There are other libraries as well like CoreNLP, spaCy, PyNLPI, and Polyglot. NLTK and spaCy are most widely used. Spacy works well with large information and for advanced NLP.

We are going to use NLTK to perform sentiment analysis to assess if a Twitter post is about Covid-19 or not. You can read more about the datasets we are going to use here

The first step is to install NLTK in your working environment

pip install nltk

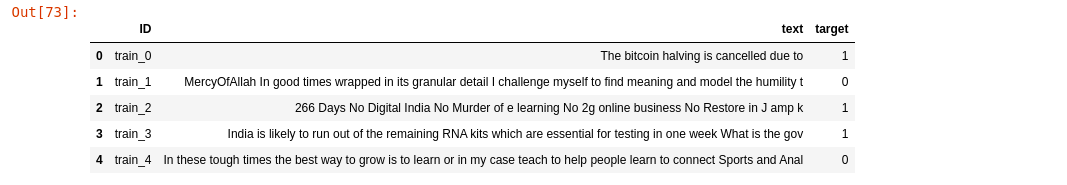

Data Loading

Our datasets have three features ID, text, and target where ID just indexes each tweet, the text is the tweet needed to be classified and the target is the label of the tweet where 1 means the tweet with Covid contents and 0 tweets with no Covid contents.

import pandas as pd

tweets = pd.read_csv("../data/tweets.csv")

tweets.head()

Overview of tweets

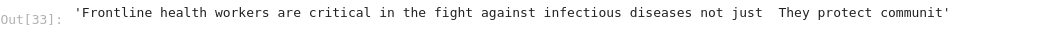

Let's try to filter some tweets one with Covid contents and another with non-Covid contents.

# %tweet with covid contents

tweets[tweets['target'] == 1 ].loc[8,'text']

# %tweet with non covid contents

tweets[tweets['target'] == 1].loc[1,'text']

Data Cleaning

The process is usually step-wise, regardless of which language of those sentiments are coming, only some changes can be done to fit the requirements of sentiment analysis but the idea of cleaning text data is the same. We have loaded the datasets it is time to clean those texts.

Removing Punctuation

Punctuation is nonmeaningful when we come in sentiment analysis we should remove from strings to remain with clean sentiments. We can do so by using remove_punctuation function on the snippet below.

# %function to remove punctuation using string library

def remove_punctuation(text):

'''a function for removing punctuation'''

translator = str.maketrans('', '', string.punctuation)

return text.translate(translator)

tweets['text'] = tweets['text'].apply(remove_punctuation)

Converting Text to Lowercase

In sentiment analysis, we should consider converting text to lowercase because they can be a problem for a couple of reasons. Imagine the world "us".It could be a pronoun representing "we" on the sentence or the country "USA". We can do so by using the piece of code below.

tweets['text'] = tweets['text'].apply(lambda x: " ".join(x.lower() for x in x.split()))

Removing Stop words

A Text may contain words like ‘is’, ‘was’, ‘when’ etc. We can remove stopwords from the text. There is no universal list of stop words in NLP, however, the NLTK library provides a list of stop words, so here we will use the snippet below to remove all stopwords from the text.

# %import nltk

import nltk

# %download stopwords using nltk

nltk.download('stopwords')

# %remove english stopwords

allstopwords = nltk.corpus.stopwords.words("english")

tweets['text'] = tweets['text'].apply(lambda x: " ".join(i for i in x.split() if i not in allstopwords))

Removing Numbers

Numbers or words combined with numbers are very hard to process, you can find a sentence with words like covid-19 , software2020 or plan7. For this can, we should remove these kinds of words be problems for machines to understand. For our case here is a piece of code to perform such a task.

def remove_numbers(text):

'''a function to remove numbers'''

txt = re.sub(r'\b\d+(?:\.\d+)?\s+', '', text)

return txt

tweets['text'] = tweets['text'].apply(remove_numbers)

Tokenization

The process of breaking down the text into smaller units is called tokens. If we have a sentence, the idea is to separate each word and build a vocabulary such that we can represent all words uniquely in a list. Numbers, words, etc.. all fall under tokens. NLTK has different methods to perform such tasks. For our case, we will use sent_tokenize to perform tokenization

nltk.download('punkt')

from nltk.tokenize import sent_tokenize

tweets['text'] = sent_tokenize(tweets['text'])

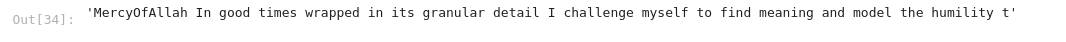

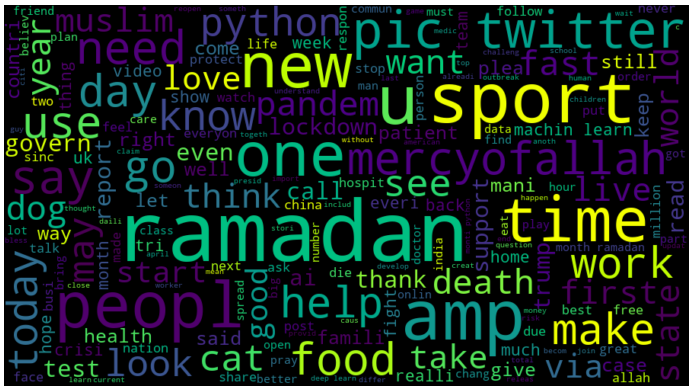

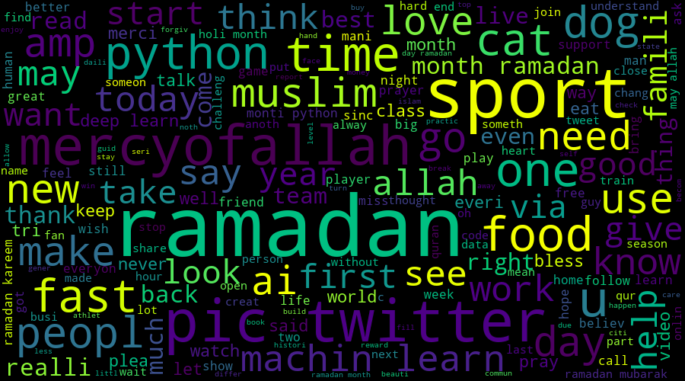

Story Generation and Visualization from Tweets

Take a look at visualizations to answer some questions or to tell stories like

- What are the most common words in the entire dataset?

- What are the most common words in the dataset for each class, respectively?

- Which trends are associated with either of the sentiments? Are they compatible with the sentiments?

We will be using a word cloud to visualize our tweets

# create text from all tweets

all_words = ' '.join([text for text in tweets['text']])

wordcloud = WordCloud(width=900, height=500, random_state=21, max_font_size=110).generate(all_words)

plt.figure(figsize=(15, 7))

plt.imshow(wordcloud, interpolation="bilinear")

plt.axis('off')

plt.show()

Word cloud Visualization for tweets

From left word cloud visualization of all tweets, at the middle the visualization of tweets about Covid and the last(right side) the visualization of tweets with non-Covid contents.

Feature Extraction and Classification

Here we are going to extract tweets and split them into two groups, train group and test group where train group will be used to train our sentiment model and the test group to validate the performance of our model, the splitting task will be done by using sklearn python library

we are going to use NLTK methods to perform feature extractions

# %Extracting word features

def get_words_in_tweets(tweets):

all = []

for (words, target) in tweets:

all.extend(words)

return all

def get_word_features(wordlist):

wordlist = nltk.FreqDist(wordlist)

features = wordlist.keys()

return features

w_features = get_word_features(get_words_in_tweets(tweets))

def extract_features(document):

document_words = set(document)

features = {}

for word in w_features:

features['containts(%s)' % word] = (word in document_words)

Then, Let's use NLTK Naive Bayes Classifier to classify the extracted tweet word features.

from nltk.classify import SklearnClassifier

training_set = nltk.classify.apply_features(extract_features,tweets)

classifier = nltk.NaiveBayesClassifier.train(training_set)

The final step is to evaluate how far our trained model can perform sentiment classification with unseen tweets.

noncovid_cnt = 0

covid_cnt = 0

for obj in test_nocovid:

res = classifier.classify(extract_features(obj.split()))

if(res == 0):

noncovid_cnt = noncovid_cnt + 1

for obj in test_covid:

res = classifier.classify(extract_features(obj.split()))

if(res == 1):

covid_cnt = covid_cnt + 1

print('[Non Covid]: %s/%s ' % (len(test_nocovid),noncovid_cnt))

print('[Covid]: %s/%s ' % (len(test_covid),covid_cnt))

The final model with NLTK was able to predict collect 530 tweets with Non-Covid contents out of 581, equivalent to 91%, and 410 tweets with Covid contents out of 477, equivalent to 85%.

Well done, now you are familiar with NLTK that allows you to process text, analyze text to gain particular information, you can investigate more on how to make your model improve also you can opt to select other classifiers to compare with Naive Bayes.

Thank you, you can access full codes here

Relationship Between Neurotech and Natural Language Processing(NLP)

Natural Language Processing is a powerful tool when your solve business challenges, associating with the digital transformation of companies and startups. Sarufi and Neurotech offer high-standard solutions concerning conversational AI(chatbots). Improve your business experience today with NLP solutions from experienced technical expertise.

Hope you find this article useful, sharing is caring.